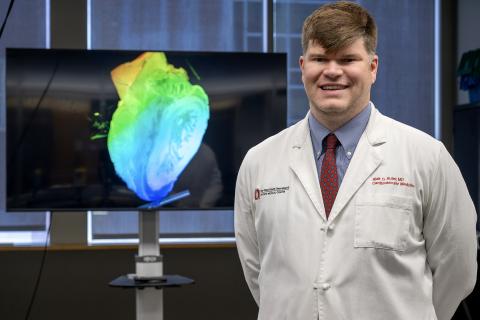

Cardiologist Blair Suter, MD, Uses New VR Technology for Medical Education

Ohio State cardiologist Dr. Blair Suter is exploring virtual reality for better visualization of medical scans. With syGlass, he’s creating immersive learning experiences for medical trainees that can help improve procedural planning in the future.

Blair Suter, MD, is a cardiologist at The Ohio State University Wexner Medical Center who is board-certified in pediatrics, internal medicine and cardiovascular disease. Earlier this year, Dr. Suter approached the EdTech Incubator (ETI) team to see how its technology could help him better visualize cardiac multimodality imaging in virtual reality.

The Exploration

As a new faculty member in the division of cardiology, Dr. Suter first learned about the ETI through the curriculum cohort in Ohio State’s FAME (Faculty Advancement, Mentoring and Education) program. The cohort spent time in the 3D Printing Lab, Virtual Reality Zone and Anatomy Visualization Zone to learn what technology was available and how faculty could use this free resource. After the tour and demonstrations, Dr. Suter reached out to ETI Coordinator Mo Duncan for guidance on using the technology.

When asked what initially motivated the project, Suter said, “Using cardiac CT, we look at structural heart disease, including devices implanted in structural and congenital heart disease. Oftentimes, those things are difficult to visualize when you are just getting started, so what we’re looking at here is trying to do 3D modeling in virtual reality to try to make that easier for learners and possibly for clinical application as well.”

The Solution

After hearing what Dr. Suter wanted to explore, Duncan recommended syGlass, a new software application for visualizing 3D models in virtual reality. For their current pilot study, Suter and his cardiology fellow, Omar Latif, MD, are using a patient case in which a WATCHMAN device was malpositioned.

The WATCHMAN is a small device that fits directly into the left atrial appendage (LAA) of the heart and closes it off, keeping blood clots that form there from causing a stroke. It is intended as a one-time procedure that lets patients avoid long-term use of blood-thinning medications and the bleeding risks that come with them. However, if the device is malpositioned, the patient is once again at higher risk for blood clots and stroke.

Using syGlass, Suter uploaded a patient’s de-identified CT scan and viewed the heart in 3D while wearing a Varjo XR-3 virtual reality headset. While viewing the scan, Suter manipulated the image, rotating it and slicing through the heart at various angles to get a better look at the device placement. After finding the best view of the device, Suter recorded a video from within the headset. Learners can watch the video to see exactly what Suter saw in the headset while listening to him explain the malpositioning and how this kind of visualization can inform procedural planning to correct the placement.

Suter stated, “We’re on the first step, but myself and the cardiology fellow I’m working with, Omar, have talked about all kinds of different cardiac applications that VR modeling can have, in education but clinically as well.”

The Experience

“After the initial time I was here for the demo, I came over here and spent about 30 minutes and was able to manipulate the models in that time with me never having used this before and learning how to use this software and equipment,” said Suter. “This is just my second time using it, and I could navigate it with 10 minutes of preparation.”

Suter is currently using his video of the WATCHMAN CT scan as a prototype for a much larger vision. He plans to record a series of videos to build a library that gives medical students, residents and fellows immersive learning experiences with cardiac imaging.

Learn more about syGlass in our recent news article: Ohio State Approves syGlass Software for Virtual Reality.